Chapter 5 Recurrent Neural Networks (RNN)

5.1 Understanding Recurrent Neural Networks
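A recurrent layer reads a sequence one time step at a time and carries a state vector from one step to the next. The base-R sketch below shows the computation behind a simple RNN such as `layer_simple_rnn()`, used in 5.2. At each step the new state is tanh(x_t W + h_{t-1} U + b), and only the final state is returned, which matches the Keras default `return_sequences = FALSE`. The function name `simple_rnn_forward()` and the weight names `W`, `U` and `b` are illustrative assumptions (Keras stores the corresponding weights as `kernel`, `recurrent_kernel` and `bias`), and the random weights are placeholders, not trained values.

```r
# Hypothetical helper: forward pass of a simple RNN over one sequence.
# At each time step t: h_t = tanh(x_t %*% W + h_{t-1} %*% U + b)
simple_rnn_forward <- function(x, W, U, b) {
  # x: (timesteps, input_features); W: (input_features, units)
  # U: (units, units);              b: numeric vector of length units
  h <- rep(0, ncol(W))  # the state starts at zero
  for (t in seq_len(nrow(x))) {
    h <- tanh(as.vector(x[t, ] %*% W + h %*% U) + b)
  }
  h  # only the last state is returned (like return_sequences = FALSE)
}

# Placeholder random weights and a random input sequence
set.seed(42)
timesteps <- 5
input_features <- 4
units <- 3
x <- matrix(rnorm(timesteps * input_features), nrow = timesteps)
W <- matrix(rnorm(input_features * units, sd = 0.1), nrow = input_features)
U <- matrix(rnorm(units * units, sd = 0.1), nrow = units)
b <- rep(0, units)

h_final <- simple_rnn_forward(x, W, U, b)
print(h_final)  # a vector of length `units`, each value in (-1, 1)
```

The loop makes the key point explicit: the same `W`, `U` and `b` are reused at every time step, so the number of weights does not grow with the length of the sequence.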

5.2 RNN with Keras

library(keras)

max_features <- 10000  # Number of words to consider as features
maxlen <- 500          # Keep at most this many words per review (among the max_features most common words)
batch_size <- 32

cat("Loading data...\n")
## Loading data...

# Load the data
imdb <- dataset_imdb(num_words = max_features)
c(c(input_train, y_train), c(input_test, y_test)) %<-% imdb
cat(length(input_train), "train sequences\n")
## 25000 train sequences
cat(length(input_test), "test sequences\n")
## 25000 test sequences

# Pad (or truncate) every review to exactly maxlen word indices
input_train <- pad_sequences(input_train, maxlen = maxlen)
input_test <- pad_sequences(input_test, maxlen = maxlen)
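With its default arguments (`padding = "pre"`, `truncating = "pre"`, `value = 0`), `pad_sequences()` adds zeros at the front of short reviews and, for reviews longer than `maxlen`, drops words from the front so that the last `maxlen` words are kept. The base-R function `pad_pre()` below is a hypothetical re-implementation of that default behaviour on a toy list, meant only to illustrate it; it is not the keras code.

```r
# Hypothetical helper mimicking pad_sequences() defaults:
# padding = "pre", truncating = "pre", value = 0
pad_pre <- function(seqs, maxlen, value = 0L) {
  out <- matrix(value, nrow = length(seqs), ncol = maxlen)
  for (i in seq_along(seqs)) {
    s <- seqs[[i]]
    if (length(s) > maxlen) s <- tail(s, maxlen)  # truncate at the front, keep the end
    if (length(s) > 0) out[i, (maxlen - length(s) + 1):maxlen] <- s  # pad at the front
  }
  out
}

toy <- list(c(5L, 8L), c(1L, 2L, 3L, 4L, 9L, 7L), integer(0))
pad_pre(toy, maxlen = 4)
#      [,1] [,2] [,3] [,4]
# [1,]    0    0    5    8
# [2,]    3    4    9    7
# [3,]    0    0    0    0
```

Because index 0 is reserved for padding in the IMDB encoding, the zeros do not collide with real word indices.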

cat("input_train shape:", dim(input_train), "\n")
## input_train shape: 25000 500

Let’s train a model with a simple RNN layer.

model <- keras_model_sequential() %>%
  layer_embedding(input_dim = max_features, output_dim = 32) %>%  # word index -> 32-d vector
  layer_simple_rnn(units = 32) %>%                                # returns only the last output
  layer_dense(units = 1, activation = "sigmoid")                  # probability that a review is positive

model %>% compile(
  optimizer = "rmsprop",
  loss = "binary_crossentropy",
  metrics = c("acc")
)
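The `"binary_crossentropy"` loss fits this setup because the last layer outputs a single sigmoid probability per review. For labels y in {0, 1} and predicted probabilities p, it averages -(y log p + (1 - y) log(1 - p)) over the samples. The base-R function below is a minimal sketch of that formula; clipping p away from 0 and 1 with `eps = 1e-7` is an assumption meant to mirror the small epsilon Keras uses to avoid log(0).

```r
# Minimal sketch of binary cross-entropy for sigmoid outputs.
# eps = 1e-7 is an assumed clipping constant that keeps log() finite.
binary_crossentropy <- function(y_true, y_pred, eps = 1e-7) {
  p <- pmin(pmax(y_pred, eps), 1 - eps)
  -mean(y_true * log(p) + (1 - y_true) * log(1 - p))
}

y_true <- c(1, 0, 1, 0)
y_pred <- c(0.9, 0.1, 0.6, 0.4)  # confident-and-right vs. hesitant predictions
binary_crossentropy(y_true, y_pred)
```

Confident correct predictions (0.9 for a positive, 0.1 for a negative) contribute much less loss than hesitant ones (0.6 and 0.4), which is what pushes the sigmoid outputs towards 0 or 1 during training.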

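history <- model %>% keras::fit(
  input_train, y_train,
  epochs = 10,
  batch_size = 128,       # note: not the batch_size variable (32) defined above
  validation_split = 0.2  # hold out 20% of the training reviews for validation
)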
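`validation_split = 0.2` tells `fit()` to set aside part of the training data for validation; according to the Keras documentation, the held-out samples are the last ones in `x` and `y`, selected before shuffling. The plain-R arithmetic below shows the resulting sizes for this call. The `floor()` rounding is an assumption about how Keras computes the split point, and the variable names are illustrative.

```r
# Numbers taken from the fit() call above
n_samples <- 25000        # length(y_train)
validation_split <- 0.2
fit_batch_size <- 128

# Assumption: the first floor(n * (1 - split)) samples are used for training
# and the remaining ones (the last 20%) for validation
n_train <- floor(n_samples * (1 - validation_split))
n_val <- n_samples - n_train
steps_per_epoch <- ceiling(n_train / fit_batch_size)  # the last batch is smaller

cat("training samples:  ", n_train, "\n")
cat("validation samples:", n_val, "\n")
cat("steps per epoch:   ", steps_per_epoch, "\n")
```

With the 25,000 IMDB training reviews this gives 20,000 training samples, 5,000 validation samples and 157 gradient updates per epoch (156 full batches of 128 plus one batch of 32).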

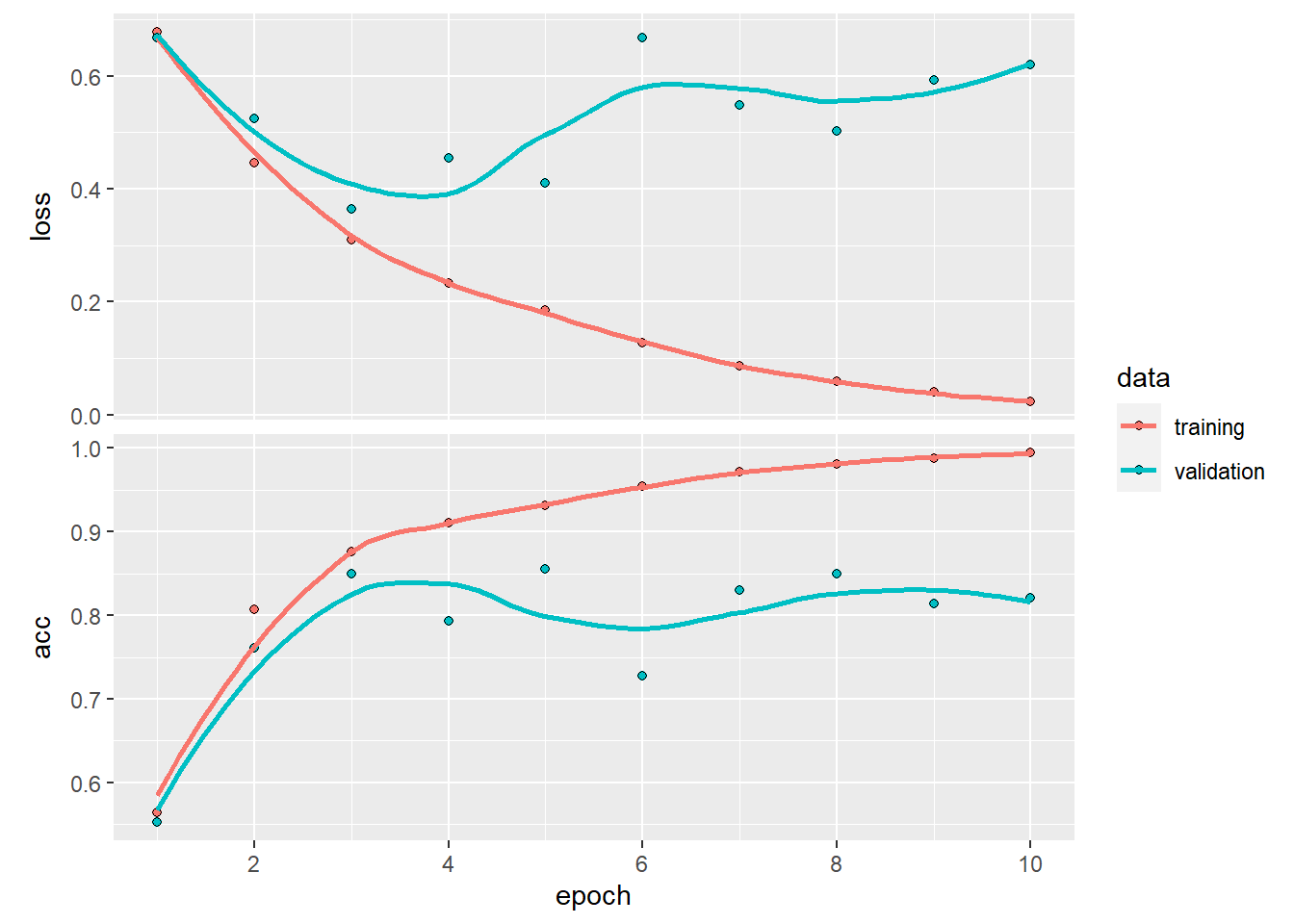

plot(history)## `geom_smooth()` using formula 'y ~ x'
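The size of this model can be checked by hand. An embedding layer stores one `output_dim` vector per word index; a simple RNN layer has an input kernel (input_dim x units), a recurrent kernel (units x units) and a bias (units); the dense layer has one weight per input plus a bias. The sketch below does this arithmetic in plain R using the sizes from the model definition; the total, 322,113, should match the "Total params" line printed by `summary(model)`.

```r
# Sizes taken from the model definition above
vocab_size <- 10000  # input_dim of layer_embedding (max_features)
embed_dim <- 32      # output_dim of layer_embedding
rnn_units <- 32      # units of layer_simple_rnn

embedding_params <- vocab_size * embed_dim                    # one 32-d vector per word index
simple_rnn_params <- rnn_units * (embed_dim + rnn_units + 1)  # kernel + recurrent kernel + bias
dense_params <- rnn_units * 1 + 1                             # one weight per input + bias
total_params <- embedding_params + simple_rnn_params + dense_params

print(c(embedding = embedding_params, simple_rnn = simple_rnn_params,
        dense = dense_params, total = total_params))
```

Almost all of the weights (320,000 of 322,113) sit in the embedding layer; the recurrent layer itself is tiny.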

5.3 LSTM with Keras
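An LSTM layer replaces the single tanh update of a simple RNN with gated updates. An input gate, a forget gate and an output gate control how much new information is written to a separate cell state, how much of the old cell state is kept, and how much of it is exposed as output. The base-R sketch below runs these equations over a short random sequence. It assumes logistic sigmoid gates (recent keras versions default `recurrent_activation` to `"sigmoid"`; check the argument in your installed version), and the function name `lstm_step()` and the per-gate list layout of `W`, `U` and `b` are hypothetical choices for readability, not how Keras stores its weights.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# Hypothetical helper: one LSTM time step.
# W, U and b are lists with one entry per block: "i", "f", "c", "o".
lstm_step <- function(x_t, h_prev, c_prev, W, U, b) {
  pre <- function(name) as.vector(x_t %*% W[[name]] + h_prev %*% U[[name]]) + b[[name]]
  i <- sigmoid(pre("i"))     # input gate: how much new information to write
  f <- sigmoid(pre("f"))     # forget gate: how much of the old cell state to keep
  c_tilde <- tanh(pre("c"))  # candidate values for the cell state
  o <- sigmoid(pre("o"))     # output gate: how much of the cell state to expose
  c_t <- f * c_prev + i * c_tilde
  h_t <- o * tanh(c_t)
  list(h = h_t, c = c_t)
}

# Placeholder random weights and a random input sequence
set.seed(1)
input_features <- 4
units <- 3
timesteps <- 6
gate_names <- c("i", "f", "c", "o")
W <- setNames(lapply(gate_names, function(g)
  matrix(rnorm(input_features * units, sd = 0.1), nrow = input_features)), gate_names)
U <- setNames(lapply(gate_names, function(g)
  matrix(rnorm(units * units, sd = 0.1), nrow = units)), gate_names)
b <- setNames(lapply(gate_names, function(g) rep(0, units)), gate_names)
x <- matrix(rnorm(timesteps * input_features), nrow = timesteps)

state <- list(h = rep(0, units), c = rep(0, units))
for (t in seq_len(timesteps)) {
  state <- lstm_step(x[t, ], state$h, state$c, W, U, b)
}
print(state$h)  # final output, which is what layer_lstm() returns by default
```

The additive update `f * c_prev + i * c_tilde` is the design choice that matters: when the forget gate stays close to 1, the cell state can carry information across many time steps without being squashed by a tanh at every step.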

model <- keras_model_sequential() %>%
  layer_embedding(input_dim = max_features, output_dim = 32) %>%  # word index -> 32-d vector
  layer_lstm(units = 32) %>%                                      # returns only the last output
  layer_dense(units = 1, activation = "sigmoid")                  # probability that a review is positive

model %>% compile(
  optimizer = "rmsprop",
  loss = "binary_crossentropy",
  metrics = c("acc")
)

history <- model %>% keras::fit(
  input_train, y_train,
  epochs = 5,
  batch_size = 128,
  validation_split = 0.2
)

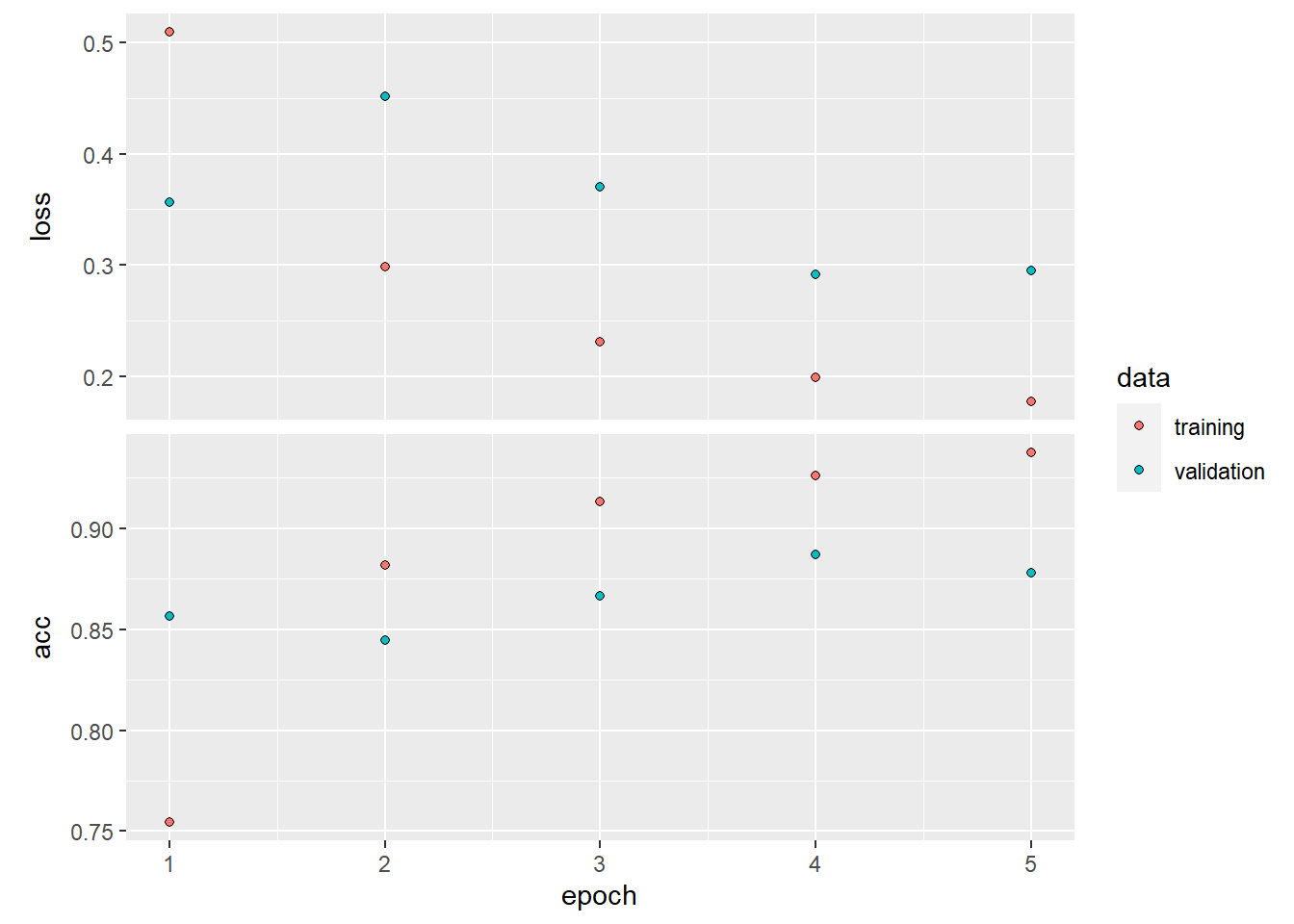

plot(history)
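Compared with the simple RNN in 5.2, the LSTM layer learns four sets of input kernel, recurrent kernel and bias (one each for the input, forget and output gates and the candidate cell state), so its recurrent layer has four times as many parameters. A quick check in plain R, using the sizes from the model above (the variable names are illustrative):

```r
# Sizes taken from the LSTM model definition above
vocab_size <- 10000
embed_dim <- 32
units <- 32

simple_rnn_params <- units * (embed_dim + units + 1)
# Four blocks (input, forget and output gates plus the candidate cell state),
# each with its own kernel, recurrent kernel and bias
lstm_params <- 4 * units * (embed_dim + units + 1)
total_lstm_model <- vocab_size * embed_dim + lstm_params + (units + 1)

print(c(simple_rnn = simple_rnn_params, lstm = lstm_params, total = total_lstm_model))
```

This gives 8,320 LSTM parameters versus 2,080 for the simple RNN, and 328,353 parameters for the whole model; `summary(model)` can be used to confirm the figure.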